6. Schedules of Reinforcement

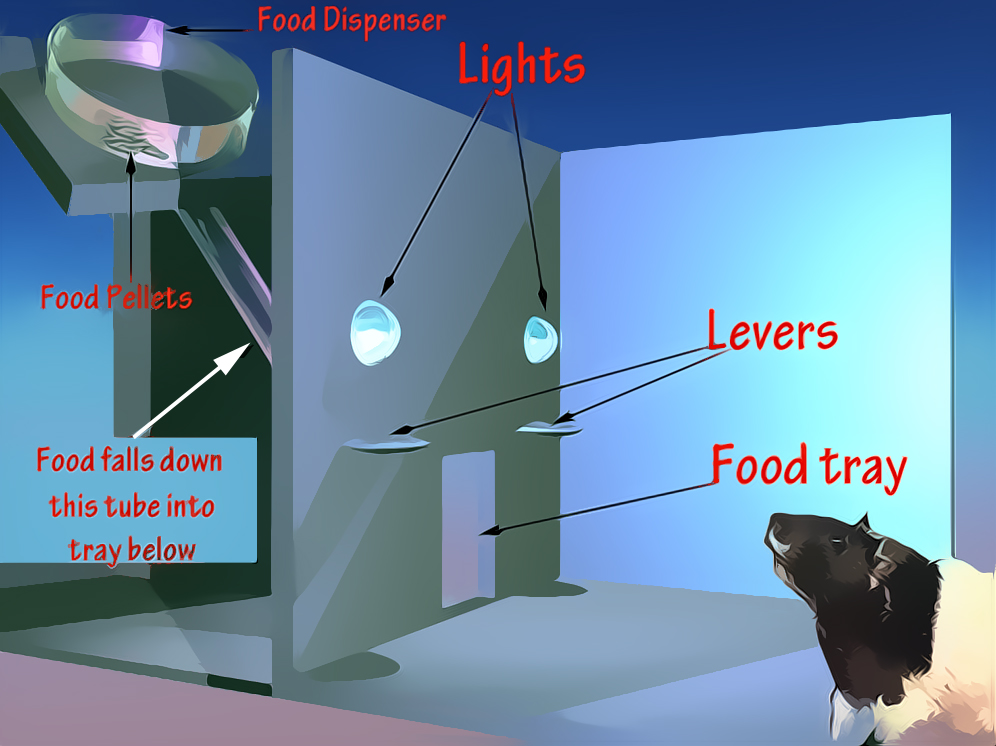

Since the goal of behaviour analysis is to uncover natural laws of behaviour, it makes sense to examine behaviour in an environment that can be controlled. That is to say, if you want to uncover laws that explain an organism's interaction with the environment, you need some degree of control over the environment in which the organism is observed. For that reason B.F. Skinner devised an apparatus called an 'operant chamber', sometimes referred to as a 'Skinner box' (Fig. 6.1).

In a typical box that uses rats as subjects, a food tray is situated on one wall between two levers. Various contingencies between lever pressing and food delivery can be programmed; more on this shortly. Above each lever there is usually a small light that can be switched on or off at various times, and this light can be used in a number of ways.

For example, the light above a lever could be switched on only when that lever is programmed so that pressing it leads to food delivery. Alternatively, one schedule of reinforcement might be programmed for when the light is on and a different one for when it is off, or when it is a different colour. Working with lights, or even sounds, permits an experimenter to examine how other stimuli influence the behavioural stream.

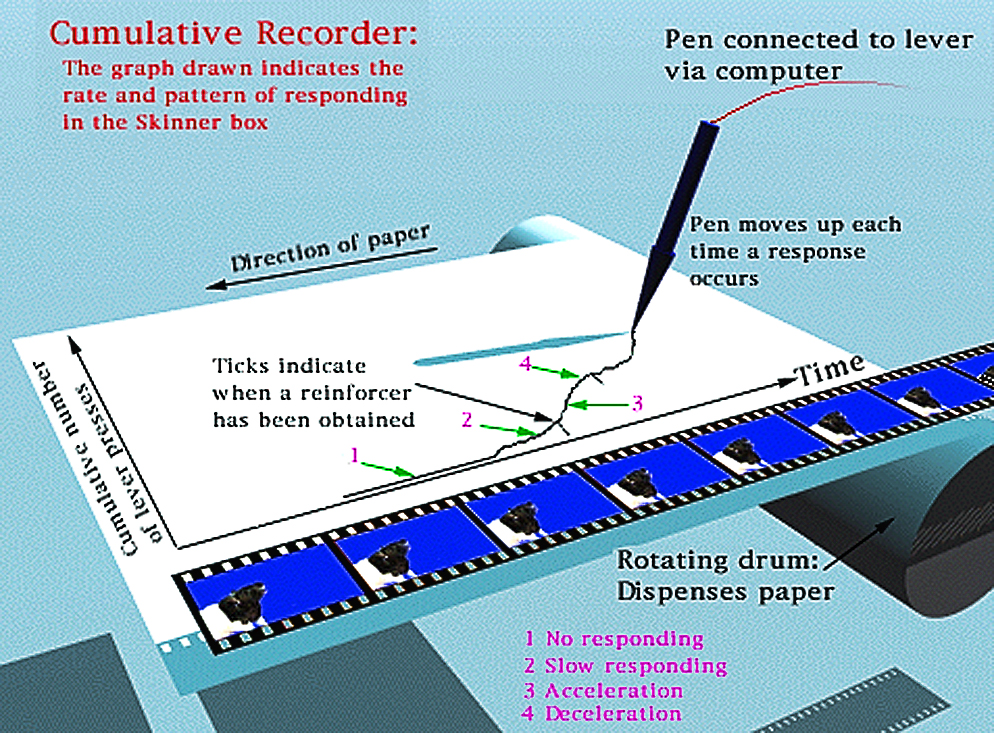

Regarding data collection, each lever is connected to a recording device via a computer. This recording device is called a cumulative recorder and it traces out on a piece of paper the cumulative number of lever presses that occur (Figure 6.2).

The paper comes off a rotating drum and moves from left to right at a constant speed, so the X-axis represents time. The shape of the graph traced on the paper therefore provides a visual record of the rate of responding on the lever: the steeper the slope, the higher the rate.

In Figure 6.2, for example, four different rates of responding are indicated. With each lever press the pen steps up a notch on the Y-axis, so when the line runs parallel to the X-axis there is no responding; this is the first rate shown. The second rate shows responding interspersed with pauses, producing a low rate of responding. The third rate shows that lever pressing has suddenly increased in frequency, before it slows down to produce the fourth rate.
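The logic of the cumulative recorder can be sketched in a few lines of Python. This is a minimal illustration, not a model of any real recording equipment. It assumes lever presses are stored as timestamps in seconds, and the names `cumulative_record` and `local_rate` are hypothetical. Because time runs along the X-axis and the count rises by one per press, the local rate of responding is simply the slope between two points on the record.

```python
from bisect import bisect_right


def cumulative_record(press_times, session_length, step=1.0):
    """Sample a cumulative record every `step` seconds.

    Returns (time, total presses so far) pairs: time advances at a constant
    speed, like the paper, and the count steps up once per press, like the pen.
    """
    times = sorted(press_times)
    n_samples = int(session_length // step) + 1
    return [(i * step, bisect_right(times, i * step)) for i in range(n_samples)]


def local_rate(record, start, end):
    """Presses per second between two sample times, i.e. the slope of the record."""
    counts = dict(record)
    return (counts[end] - counts[start]) / (end - start)


# Hypothetical session reproducing the four rates described for Figure 6.2.
presses = ([11 + 2 * i for i in range(5)]           # 10-20 s: low rate, with pauses
           + [20.25 + 0.5 * i for i in range(20)]   # 20-30 s: sudden burst
           + [30.5 + i for i in range(10)])         # 30-40 s: slowing down
record = cumulative_record(presses, 40)             # 0-10 s: no presses at all
for start, end in [(0, 10), (10, 20), (20, 30), (30, 40)]:
    print(f"{start}-{end} s: {local_rate(record, start, end)} presses/s")
```

Running it prints rates of 0.0, 0.5, 2.0 and 1.0 presses per second for the four segments. These correspond to a flat line, a shallow slope, a steep slope and an intermediate slope on the record.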

The value of this recording system is clearer when you examine some examples of performance on four basic schedules of reinforcement in Fig. 6.3.

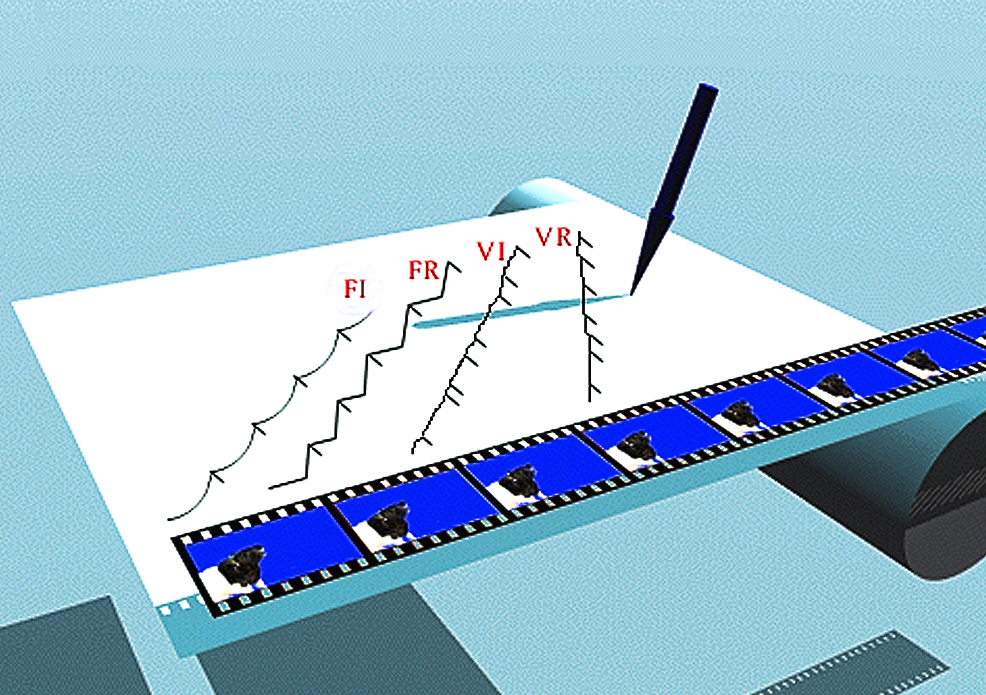

The schedule performances shown here include the Fixed Interval (FI), the Variable Interval (VI), the Fixed Ratio (FR), and the Variable Ratio (VR) schedules. The differences in their construction lie in the way in which relations are arranged between time, responses, and reinforcers. The FI and VI schedules directly control the times at which a single response can produce a reinforcer:

FI.....The first response after a fixed period of time has elapsed since the previous reinforcer produces a reinforcer immediately.
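The FI contingency can be expressed as a short piece of code. The sketch below is an illustration under stated assumptions rather than the program used in any particular laboratory. `FixedInterval` is a hypothetical name and times are in seconds. The session is assumed to start as though a reinforcer had just been delivered, so the first interval is timed from zero.

```python
class FixedInterval:
    """FI schedule: the first response made at least `interval` seconds after
    the previous reinforcer produces a reinforcer immediately."""

    def __init__(self, interval):
        self.interval = interval
        # Assumption: the first interval is timed from the start of the session.
        self.last_reinforcer = 0.0

    def respond(self, t):
        """Register a response at time t; return True if it is reinforced."""
        if t - self.last_reinforcer >= self.interval:
            self.last_reinforcer = t  # the next interval is timed from this delivery
            return True
        return False  # responses before the interval has elapsed have no effect


fi = FixedInterval(30)
press_times = [5, 12, 29, 31, 40, 58, 61, 62, 95]
reinforced = [t for t in press_times if fi.respond(t)]
print(reinforced)  # [31, 61, 95]
```

The presses at 5, 12 and 29 s earn nothing. On this schedule, responding early in the interval has no effect on when the reinforcer arrives.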

A simulation of the contingencies that make up the FI schedule is shown in Movie 6.1.

We see here that the reinforcer (S+) is presented only if a lever press occurs after a fixed period of time has elapsed. The cumulative record shows the kind of performance this schedule produces. After each reinforcer delivery (indicated by the tick on the continuous line), there is a pause in responding, followed by an acceleration in responding up to the next reinforcer delivery.

This pattern is consistent across the whole session. School lessons provide a good everyday example: a pupil getting up for recess is reinforced only once every hour, at the end of each lesson. If a pupil gets up before the lesson is finished, this behaviour will not be reinforced by free play in the yard; in fact, it may be ‘punished’ by the teacher’s reprimand to sit down again. After each recess, pupils will usually attend quite well for the next lesson until it comes close to the end of the hour, when they become restless, i.e., they are ‘getting ready’ for the next recess.

What’s remarkable about the pattern of responding on any schedule of reinforcement is that it is so consistent and predictable; AND, let’s not forget that we are also dealing with ‘voluntary’ behaviour. This is not reflexive responding as in Pavlovian conditioning, but the behaviour of a freely moving organism.

The lesson here, then, is that the specialised contingencies associated with simple reinforcement schedules are able to guide the behavioural stream into recognisable patterns. The next obvious step is to ask “What happens when you ‘tweak’ the contingencies?” Well, the only way to answer that question is to do it and see what happens. This approach is called the Experimental Analysis of Behaviour, and it relies on inductive reasoning. It is what Skinner did in his experiments: his basic approach was to vary either the time units or the number of responses needed to obtain the reinforcer.

The next schedule we’ll look at is the VI schedule (Movie 6.2).

VI.....The first response after an average period of time has elapsed since the previous reinforcer produces a reinforcer immediately.
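A VI contingency can be sketched as a clock whose interval is drawn afresh after every reinforcer. In this minimal sketch the class name `VariableInterval` is hypothetical. Intervals are assumed to come from an exponential distribution with the stated mean. This is one convenient choice; laboratory schedules may use other lists of intervals with the same average.

```python
import random


class VariableInterval:
    """VI schedule: the first response made after a variable interval
    (averaging `mean_interval` seconds) since the previous reinforcer
    produces a reinforcer immediately."""

    def __init__(self, mean_interval, rng=None):
        self.mean_interval = mean_interval
        self.rng = rng if rng is not None else random.Random()
        self.last_reinforcer = 0.0
        self.current_interval = self._draw_interval()

    def _draw_interval(self):
        # Assumption: exponentially distributed intervals with the given mean.
        return self.rng.expovariate(1.0 / self.mean_interval)

    def respond(self, t):
        """Register a response at time t; return True if it is reinforced."""
        if t - self.last_reinforcer >= self.current_interval:
            self.last_reinforcer = t
            self.current_interval = self._draw_interval()  # next interval is unpredictable
            return True
        return False


# A VI 30 s schedule with one response per second for 30,000 s.
vi = VariableInterval(30, rng=random.Random(1))
earned = sum(vi.respond(t) for t in range(1, 30_001))
print(earned)  # approximately one reinforcer per 30 s of session time
```

Because the next interval is unpredictable, there is no point in the cycle at which responding is reliably wasted. This fits the steady responding described next.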

As we can see in Fig. 6.3, a different pattern of responding is observed. There is little pausing after each reinforcer; in fact, this schedule produces high, relatively stable rates of responding.

The FR and VR schedules control the numbers of responses needed to produce a reinforcer:

FR.....There is a fixed ratio between the number of responses and the number of reinforcers. Thus an FR 5 schedule, for example, requires 5 responses to be made for each reinforcer (Movie 6.3).

VR.....There is a variable ratio between the number of responses and the number of reinforcers. Thus a VR 5 schedule requires an average of 5 responses to be made for each reinforcer (Movie 6.4).
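The two ratio contingencies can be sketched as simple response counters rather than clocks. This is an illustration under assumptions: the class names are hypothetical. For the VR schedule, each response requirement is assumed to be drawn uniformly from 1 to 2n - 1, which gives an average requirement of n.

```python
import random


class FixedRatio:
    """FR n: every n-th response produces a reinforcer."""

    def __init__(self, ratio):
        self.ratio = ratio
        self.count = 0  # responses since the last reinforcer

    def respond(self):
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.ratio:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR n: the number of responses required varies from one reinforcer
    to the next, averaging n."""

    def __init__(self, mean_ratio, rng=None):
        self.mean_ratio = mean_ratio
        self.rng = rng if rng is not None else random.Random()
        self.count = 0
        self.requirement = self._draw_requirement()

    def _draw_requirement(self):
        # Assumption: requirements uniform on 1..(2n - 1), whose mean is n.
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self):
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.requirement:
            self.count = 0
            self.requirement = self._draw_requirement()
            return True
        return False


fr5 = FixedRatio(5)
print([n for n in range(1, 16) if fr5.respond()])  # [5, 10, 15]

vr5 = VariableRatio(5, rng=random.Random(0))
print([n for n in range(1, 16) if vr5.respond()])  # irregularly spaced response numbers
```

Neither class consults a clock. On both schedules, only the number of responses determines when the reinforcer arrives.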

The response patterns for each of the schedules described above are contained in Figure 6.3.

Further details on schedule performances

FI Schedule

Baseline performance on this schedule (i.e., the performance after extensive training) is usually characterised by a pause after reinforcer delivery, followed by an acceleration in responding up to the moment of the next reinforcer. The length of the pause is roughly proportional to the length of the interval between reinforcers. This pattern is sometimes referred to as 'scalloping'.

It is tempting to explain FI patterning, or any patterning obtained in the real world where events occur at regular intervals, by suggesting that it is caused by 'time estimation'. It might be said, for example, that an organism times its responding in anticipation of the next reinforcer.

However, whether or not anticipation occurs, the changes in an organism (e.g., the behaviour of anticipating the future) are properly viewed as dependent variables and not as independent variables. This conclusion highlights an important educational function of schedule research.

That is, it offers scientists a chance to contrast traditional ways of explaining behaviour with an analysis based on a description of how organisms adapt to environmental constraints. Traditionally it is argued that causes of behaviour (i.e., the independent variables of which behaviour is a function) are invariably found inside organisms, in the brain or in the 'mind'.

However, when viewed from a holistic perspective a different picture emerges. The interface between an organism and the environment (i.e., the behaviour that is observed) can be viewed as a dynamic system whose evolution is constrained by two sets of limitations. The first are limitations of change imposed by the genetic and environmental history of the organism; the second are limitations imposed by the structure of the prevailing contingencies.

FR Schedule

At first sight it appears that FR schedules produce patterns of behaviour similar to those found on FI schedules; look at the cumulative records in Fig. 6.3.

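However, as you will have already noticed, the dynamics on this schedule are very different from those on an FI schedule. Pausing, for example, has a different function. On an FR schedule the reinforcer cannot be delivered until the required number of responses has been made, so any pause delays the time when the reinforcer will be delivered. On an FI schedule, by contrast, a pause that ends before the interval has elapsed does not delay the reinforcer at all. Indeed, it is even found that as the FR size increases, the average duration of the post-reinforcement pause (PRP) also increases.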
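A little arithmetic shows why pausing matters on an FR schedule but, within limits, not on an FI schedule. The sketch assumes an idealised organism that pauses after each reinforcer and then responds at a steady rate (responses per second). Both the function names and this steady-rate assumption are hypothetical simplifications, not a model of real performance.

```python
import math


def fr_time_to_reinforcer(pause, ratio, rate):
    """Seconds from one reinforcer to the next on FR `ratio`, assuming a pause
    and then steady responding at `rate` responses per second."""
    # All `ratio` responses must be made after the pause ends.
    return pause + ratio / rate


def fi_time_to_reinforcer(pause, interval, rate):
    """The same idealised organism on FI `interval` (seconds): the reinforcer
    arrives with the first response at or after the interval has elapsed."""
    # Responses occur at pause + k / rate for k = 1, 2, ...; find the first
    # k for which that time is at least `interval`.
    k = max(1, math.ceil((interval - pause) * rate))
    return pause + k / rate


for pause in (0, 10, 25, 35):
    print(pause,
          fr_time_to_reinforcer(pause, ratio=20, rate=2),
          fi_time_to_reinforcer(pause, interval=30, rate=2))
```

On FR 20, every second of pausing adds a second to the time between reinforcers (10, 20, 35 and 45 s). On FI 30 s, pauses of 0, 10 and 25 s all leave it at 30 s. Only the 35 s pause, which outlasts the interval, delays the reinforcer (to 35.5 s).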

It is not always a good idea to look for direct examples of any simple schedule of reinforcement in the real world. Because there are so many other more complicated schedules that could be studied, looking for evidence of a simple schedule can give the wrong impression that the study of schedules is concerned with trivial instances of behaviour.

That being said, though, many students feel the need to anchor their understanding of events in the laboratory in events in the real world. As an exercise, then, find examples in the real world where a fixed number of behaviours is needed before a particular event occurs. You might look at the relation between doing certain kinds of jobs and getting paid (e.g., working on an assembly line), or simply getting a job done (e.g., winding up a clock). You might also look for situations where the FR value changes from '1' in the initial stages of learning a skill to some other value thereafter.

For example, good teachers initially use continuous reinforcement (FR 1) in the form of praise and feedback while a student is learning. Later on, once the new skill is mastered, less frequent reinforcement may suffice to maintain performance.

VR Schedule

On this schedule the rate of responding directly affects the rate of reinforcement: the faster the organism responds, the sooner each response requirement is completed. Like the FR schedule, this schedule produces patterns of behaviour that include pauses. Here, though, the pauses are briefer and occur more randomly among responses.

When the VR value is quite low (e.g., VR 10), there are few PRPs and a relatively high response rate. This rate decreases to a sporadic low rate if reinforcement is thinned by increasing the VR value (e.g., VR 80).

Numerous activities in the real world can be viewed as being controlled by VR schedules. These include fishing, selling things door to door, playing games, gambling, and receiving compliments for work.

A point made earlier is worth mentioning again in this context. When you observe someone engaged in an activity, that observation is only one element in a pattern of behaviour that has been shaped by the various schedules in operation. This realisation radically affects any discussion on the causes of behaviour.

Thus, instead of focusing on an immediately preceding event as the ‘cause of a behaviour’, you must look at the overall pattern of behaviour of which the immediate observation is but a part.

As an exercise to demonstrate this point, select a behaviour you have identified to be controlled by a specific schedule and ask a friend to explain this behaviour; of course, your friend should not be familiar with principles of behaviour.

During your conversation make notes on where the causes of the behaviour are located by your friend. Does your friend, for example, refer to intentions, desires, feelings, needs, drives, a 'maybe next time' attitude, or other words that refer to events occurring inside an organism to explain why behaviour is persistent in the absence of consistent consequences?

Once you have clarified the way in which s/he formulates the explanation, suggest an imaginary experiment: ask him/her to imagine what would happen to the behaviour if you could wave a magic wand to make it more likely that consequences would reliably follow the behaviour.

In effect, you will be proposing an alteration to the schedule parameters in order to examine the effects on behaviour. That is, you will be suggesting a change in some aspect of the independent variable in order to change the dependent variable, the behaviour. When you do this you will see that your friend stays within the framework originally used to explain the behaviour. In so doing, your friend will give you a chance to see how the mistake of mentalism interferes with a scientific analysis of behaviour.

VI Schedule

This schedule produces a relatively stable rate of responding with minimal pausing after each reinforcer. To a certain extent, the rate of responding does not affect the rate of reinforcer delivery, although reinforcers will of course not be delivered if responding stops.

This is because increases in rate of responding cannot shorten the pre-programmed times when reinforcers are made available.
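The contrast between VI and VR can be checked with a rough simulation. On a VI schedule, response rate barely affects reinforcement rate; on a VR schedule, it drives reinforcement rate. This self-contained sketch rests on assumptions not in the text:

- responses are evenly spaced;
- VI intervals are exponentially distributed;
- VR requirements are uniform on 1 to 2n - 1;
- the function names are hypothetical.

```python
import random


def vi_reinforcers(rate, mean_interval, session, seed=0):
    """Reinforcers earned on VI `mean_interval` s by evenly spaced responding."""
    rng = random.Random(seed)
    last, interval, earned = 0.0, rng.expovariate(1 / mean_interval), 0
    for k in range(1, int(session * rate) + 1):
        t = k / rate  # time of the k-th response
        if t - last >= interval:
            earned += 1
            last, interval = t, rng.expovariate(1 / mean_interval)
    return earned


def vr_reinforcers(rate, mean_ratio, session, seed=0):
    """Reinforcers earned on VR `mean_ratio` by the same responding."""
    rng = random.Random(seed)
    count, requirement, earned = 0, rng.randint(1, 2 * mean_ratio - 1), 0
    for _ in range(int(session * rate)):
        count += 1
        if count >= requirement:
            earned += 1
            count, requirement = 0, rng.randint(1, 2 * mean_ratio - 1)
    return earned


session = 36_000  # a long (10-hour) hypothetical session, in seconds
for rate in (0.5, 2.0):  # responses per second
    print(f"rate {rate}/s: VI 30 s -> {vi_reinforcers(rate, 30, session)}, "
          f"VR 10 -> {vr_reinforcers(rate, 10, session)}")
```

Quadrupling the response rate should roughly quadruple the number of VR reinforcers while leaving the VI count almost unchanged. On the VI schedule, faster responding cannot make reinforcers available any sooner.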

The behaviour produced by this schedule makes it ideally suited for examining other phenomena. For example, responding that is reinforced in the presence of some stimulus changes when a property of that stimulus is changed. This change in responding is orderly and the extent of it is dependent on how much the stimulus has changed.

The phenomenon demonstrated by such a procedure is called 'generalisation'. The effects of reinforcement in the presence of one stimulus spread to other stimuli that differ from it along some dimension, and the further a stimulus is from the original training stimulus, the smaller the effect. Suppose, for example, that responding is reinforced in the presence of a tone of a certain frequency.

When responding is examined in the presence of other sound frequencies it is found that the rate decreases the further you move away from this tone, using either lower or higher frequencies. Thus, even though responding was not trained in the presence of these new frequencies, it still occurs but to a lesser degree.
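The shape of such a generalisation gradient can be illustrated with a simple function. The numbers here are invented for illustration and are not data. The sketch assumes a symmetric, bell-shaped (Gaussian) gradient centred on a hypothetical 1000 Hz training tone, with an arbitrary peak rate and width. Real gradients need not be symmetric or Gaussian.

```python
import math


def generalisation_gradient(test_hz, trained_hz=1000.0, peak_rate=60.0, width_hz=200.0):
    """Hypothetical response rate (responses per minute) to a test tone.

    Assumption: a Gaussian-shaped gradient centred on the trained frequency;
    `peak_rate` and `width_hz` are illustrative values only.
    """
    distance = test_hz - trained_hz
    return peak_rate * math.exp(-distance ** 2 / (2 * width_hz ** 2))


for hz in (600, 800, 1000, 1200, 1400):
    print(hz, round(generalisation_gradient(hz), 1))
# Prints one pair per line: 600 8.1, 800 36.4, 1000 60.0, 1200 36.4, 1400 8.1
```

Responding is highest at the trained frequency and falls off on either side. Tones that were never paired with reinforcement still produce some responding, but less the further they are from the trained tone.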